In 2025, open-source technology will navigate growing challenges, from security and sustainability to funding. New AI projects may offer solutions, but uncertainty remains.

However, the open-source nature of AI development will continue to fuel concerns about its ethical and social implications, including the potential for misuse. Open-source AI models can be fine-tuned to remove safeguards, potentially leading to harmful applications.

Tensions between enterprises and vendors over transparency regarding OSS usage are lingering. Enterprises want greater transparency from vendors about the OSS components in their products. Without widespread mandates, organizations are left to manage OSS governance and security independently.

According to William Morgan, CEO of Buoyant, the developer of the Linkerd service mesh platform, this year will see the continued closure, defunding, and relicensing of open-source projects. He sees a renewed focus on open source’s sustainability and commercial viability, especially for critical infrastructure projects.

“Savvy adopters ask themselves how much can we truly rely on these projects to be around tomorrow? Finally, the resistance to discussing open source as anything other than an altruistic effort in selfless collaboration will start to erode as the economic realities of paying maintainers for a free product become increasingly obvious,” he told LinuxInsider.

Morgan’s view hints at a pending decline in open source’s underpinning structure. Other key open-source enterprise supporters take a similar stance on what may lie ahead for the open-source industry.

Ann Schlemmer, CEO of open-source database company Percona, fears that 2025 will signal the decline of open source as a business model. Her concerns stem from a year of reckoning in the open source space, marked by a collective community pushback against organizations and practices that undermine its foundational principles.

Two incidents exemplify this pushback: the coordinated launch of open source data store Valkey and the decision by cloud-based search AI platform Elastic to re-adopt the open source model.

“The open source community has put vendors on notice that they are still very much a force to be reckoned with,” Schlemmer told LinuxInsider.

With 2024 dubbed the year open source struck back, Schlemmer predicts 2025 will be the year open source ceases to gain traction as a business model. She cites antics like “the open-source bait and switch” — where organizations leverage open-source licensing to drive adoption, only to switch to more restrictive licenses once they want to cash in — as becoming a thing of the past this year.

“Because of such practices, more people will realize that single-vendor support for popular OSS projects is an inherently problematic model with a waning shelf-life. Moving forward, I believe community-supported projects and those backed by community or foundation-supported projects will become the standard for OS initiatives while single-entity OS projects will fall out of favor,” she predicted.

The Open Source Initiative has been the de facto steward of all things open source for decades. It has been working to uphold a standardized definition of open-source AI. However, Schlemmer observed that with the recent explosion in AI, the waters around what is and isn’t open source have become muddier than ever before.

In response, the OSI published its first standardized definition of open-source AI in late October. Nevertheless, despite more than two years of research and development — and a growing number of industry endorsements — consensus around the definition still does not exist, she complained.

“That is why I believe we’re only at the beginning of this extremely complex and thorny pursuit. In the year ahead, I expect we will see even more discussion and debate around the topic, with open-source idealists, pragmatists, and vendors alike weighing in on what it means to be open source in the age of AI,” Schlemmer said.

Open-source innovations will make legacy database technologies better suited for evolving data needs, according to Schlemmer. Unlike many other areas of the tech ecosystem, the database landscape continues to be dominated by legacy technologies, with decades of use and development behind them, she reasoned.

“Market leaders such as MySQL and PostgreSQL continue to show the value of a proven, trustworthy tool when it comes to handling one’s data. However, the changing nature and needs of today’s data layer will necessitate the evolution of these technologies,” she said.

Through community-driven innovation, Schlemmer contends that evolving solutions with new versions, capabilities, extensions, and integrations will be introduced at an ever-increasing rate. In 2025, a stream of such innovations will be introduced to meet the market’s changing needs.

The volume, variety, and utilization of open-source software and components have been on the rise for well over a decade. In fact, over 90% of organizations already use at least one form of open-source software in their tech stacks.

With the emergence of AI and a renewed focus on enterprise efficiency acting as the primary drivers, Schlemmer believes 2025 will be a watershed year for the open-source ecosystem. In its tenth annual State of the Software Supply Chain report, Sonatype estimated that 2024 saw the largest single annual increase in open-source software consumption to date.

The credit for that — at least in part — is due to the growing need for organizations to tighten purse strings and streamline tech stacks. The good news is that from 2022 to 2024, the total number of available open-source projects increased by roughly 20% yearly.

“So, with demand soaring, innovation keeping pace, and no real sign of either slowing, I believe in 2025, open-source technology will be a truly momentous year for open source,” Schlemmer predicted.

The new year is seeing the first real community engagement around open-source build and testing tools, and that engagement is expected to grow. Two efforts spearheading this surge have common ground.

One is EngFlow, founded by ex-Googlers who helped create the open-source build system Bazel. The other is Buck2, Meta’s open-source build system.

“We see some interesting things on the horizon for open source as it relates to developer build systems and remote execution in 2025,” EngFlow’s CEO and co-founder Helen Altshuler told LinuxInsider.

She added that this is important to watch in the coming months and years. Developers and platform engineers are challenged to build code and test faster and more cost-effectively than ever because of the bigger code bases resulting from organic growth, open-source usage, and increasing AI-generated code. Bazel and Buck2 improve the developer experience with their modern approach to dependency management, code hermeticity, and parallelization, she explained.
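To make the hermeticity idea concrete, here is a minimal Python sketch of a content-addressed build cache. It is an illustration only, not how Bazel or Buck2 are implemented: the names `action_key`, `build`, and `cache` are hypothetical, and the sketch assumes a build step depends on nothing except its command line and its declared input files.

```python
import hashlib

def action_key(command, inputs):
    """Derive a cache key from a command and the contents of its declared inputs.

    `inputs` maps a file name to its bytes. Hypothetical helper for illustration.
    """
    h = hashlib.sha256()
    h.update(command.encode())
    for name in sorted(inputs):  # sort so dict ordering cannot change the key
        h.update(b"\0" + name.encode() + b"\0")
        h.update(hashlib.sha256(inputs[name]).digest())
    return h.hexdigest()

cache = {}  # in-memory stand-in for a shared or remote cache

def build(command, inputs, run):
    """Run `run(inputs)` only if this exact command and input set is new."""
    key = action_key(command, inputs)
    if key not in cache:
        cache[key] = run(inputs)
    return cache[key]
```

Because the key covers every declared input, an unchanged step can be skipped safely, and steps with different keys can run in parallel or on remote workers without affecting one another.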

“While many open-source tools and platforms today are hosted and governed by neutral nonprofit foundations, Bazel and Buck2 continue to be largely influenced by their original corporate sponsors, but we see that changing,” Altshuler observed.

She anticipates this year will further the shift toward a more extensive community-driven approach for both projects. EngFlow’s collaboration with Google and other partners within a Bazel working group under The Linux Foundation was a significant milestone.

This collaboration included transferring community-maintained Bazel repositories to the Linux Foundation’s GitHub organization, bazel-contrib, signaling a strong commitment to fostering a more inclusive and community-driven ecosystem for Bazel development.

Linux desktop developers may see an increase in adoption in 2025 despite the lack of commercial marketing strategies hawking the benefits of running Linux on consumer computers.

The arrival of the New Year brings a crucial decision for millions of computer users worldwide. In just 10 months, Microsoft will end support for Windows 10, leaving users with three options: upgrade to Windows 11 (if eligible), pay steep subscription fees for extended security support, or explore alternative computing solutions.

Microsoft hopes many Windows 10 users will reluctantly purchase new, more powerful devices to meet Windows 11’s upgrade requirements. For those unwilling to do so, the search for alternatives begins.

Switching to Linux offers both convenience and cost savings. Upgrading to Linux is free, and you can install it on your existing hardware to replace Windows.

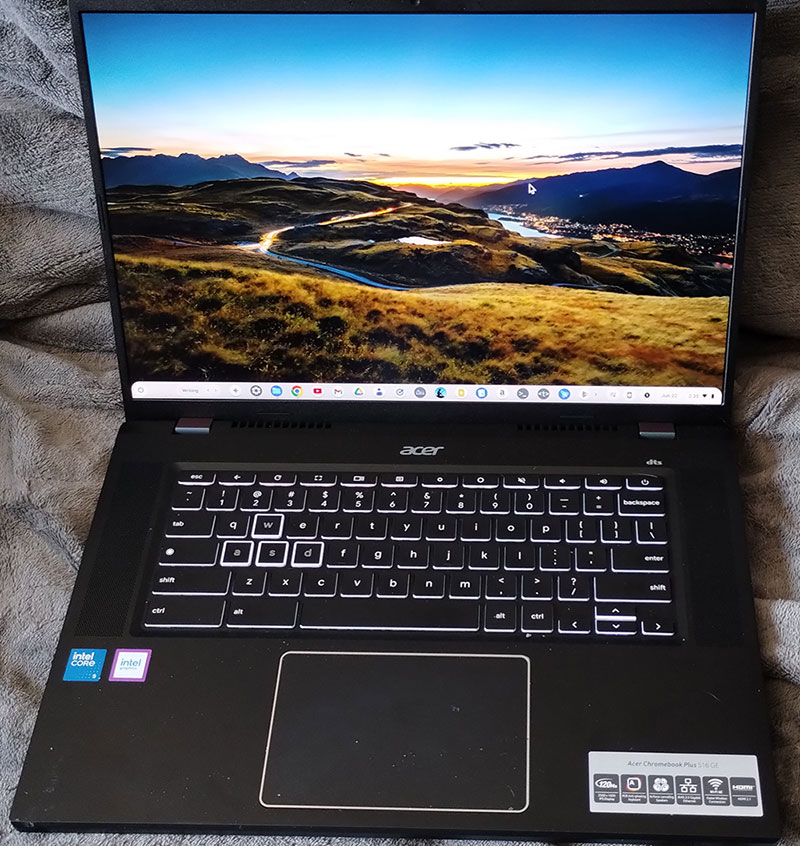

Options for stranded Windows 10 users include switching to costlier macOS-based devices or adopting Chromebooks with ChromeOS, a less expensive, cloud-focused ecosystem. Another proven alternative is letting Linux breathe new life into existing hardware with a free, customizable operating system.

With Windows 10 security updates and support ending on Oct. 14, 2025, Linux developers see an opportunity for Windows users to switch, which could make 2025 “The Year of the Linux Desktop.” According to a recent Forbes article, “nearly 900 million Windows 10 users are still holding out,” presenting a significant opening for Linux adoption.

These potential Linux converts will be joined by existing Linux users exploring upgrade options to other distributions. Unlike Microsoft and Apple platforms, Linux is not a single operating system. Instead, it offers dozens of highly configurable distributions with unique features and desktop environments, providing users with unparalleled customization options.

Its diverse ecosystem offers options tailored to various needs, giving users unparalleled flexibility to shape their computing experience. Special tools even let die-hard Windows fans run their favorite Microsoft applications inside the Linux desktop.

One of my favorite Linux discovery sites was DistroTest.net, which shut down several years ago. It was a novel way to sample dozens of Linux desktops and distros from a browser tab. I could select from some 300 Linux distros running on a virtual machine (VM) the site hosted without installing or configuring anything.

Several websites offer similar capabilities using emulators. These sites run software programs that allow a computer system to mimic the functions of another system. These online tools simplify the journey of Windows 10 users hesitant to upgrade and Linux users eager to try something new. They offer ease of use when exploring the range of Linux distributions, from beginner-friendly options to advanced environments.

For inexperienced computer users, setting up and running emulators locally is a barrier to adoption. However, a few user-friendly options let you explore free, fully functional Linux operating systems without leaving your web browser, whatever platform your computer runs.

Although these websites’ Linux inventories are less extensive, they let you sample Linux without installing it on your machine. This approach gives potential new users an easier way to test drive Linux distros in their web browsers without downloading and configuring files. All you need is an internet connection and a web browser.

Unfortunately, no genuinely adequate replacement for DistroTest.net exists. Linux developers lack a commercial marketing mechanism to drive adoption, as no company owns the Linux OS. If developers offered an emulator tool on their websites, potential adopters could experience the Linux version more easily before downloading and installing it on their computers.

DistroSea hosts more than 50 Linux distros, offering a beginner-friendly way to explore options. When the website loads in your browser window, scroll through the installed options and click on a distro to try.

DistroSea requires just a few clicks to load Pop!_OS Linux into a web browser window.

OnWorks offers a more limited selection of Ubuntu, Parrot Security OS, Elementary OS, and Fedora Linux distros. It also lets you try out the Wine app to run Windows programs in Linux, Kodi Media Center, and a Windows and Mac emulator. However, the site is plastered with ads, which mar the experience.

This OnWorks view shows Elementary OS Linux running in a web browser.

LabEx offers a different user experience. It connects you to an online Linux terminal and a playground environment with a user-friendly interface for interacting with a complete Ubuntu 22.04 environment. It is free to use, but you must create an account to get started. LabEx offers multiple interfaces and integration with structured courses that create a solid platform to learn about using Linux. However, it lacks access to any other Linux distributions to try.

LabEx lets you run Linux from any web browser and learn various Linux skills, all with the click of buttons.

JSLinux is a free web service on Bellard.org that runs Linux and Windows VMs. The emulator itself runs inside your browser rather than on a remote server. Click the startup link from the menu box to choose either Alpine Linux or Fedora Workstation Linux.

Bellard’s JSLinux service shows the Dillo web browser running within Alpine Linux through a web-based connection.

Whether you’re exploring Linux for the first time or looking to expand your expertise, Linux offers unparalleled flexibility and functionality. Start by sampling distributions online and see how Linux can elevate your computing experience.

Enterprise Linux users face growing risks from software vulnerabilities, especially given their widespread reliance on open-source code in Linux applications and commercial software.

Live kernel patching minimizes the need for organizations to take down servers, reboot systems, or schedule disruptive maintenance windows. Those disruptions are a significant cost of traditional patching, and live patching offers a practical way to reduce downtime and improve operational efficiency.

Besides applying kernel patches, keeping up with security patches of known and newly discovered software vulnerabilities is a worsening problem for all computing platforms. The process is critical with the heavy integration of open-source code used in both Linux applications and commercial software.

According to Endor Labs’ Dependency Management Report released in September, security patches have a 75% chance of breaking an application. It reveals the dangers of emerging trends in open-source security and dependencies from third-party software components. Linux is used across industries to run organizations’ backend servers and applications.

These operations require better software development lifecycle security strategies, the researchers noted. They found that public advisory databases alone are not enough to manage open-source dependencies, and that fixes for known code issues are either ignored or put off indefinitely. For 69% of vulnerabilities, a median of 25 days passes between the public availability of a patch and the publication of the corresponding advisory.

“The patching process is highly disruptive. When you need to update something, you have to take down the service, or you have to take down the entire server. If anything is visible to the end user, it is a problem. It requires scheduling, acceptance by all the stakeholders, and everybody signing off on it being at the right time,” Joao Correia, cybersecurity expert at TuxCare, told LinuxInsider.

TuxCare offers automated vendor-neutral enterprise Linux security patching with zero downtime and no required reboots. Its KernelCare solution supports multiple Linux distributions commonly used in organizations, providing flexibility across different environments.

According to Correia, live patching strategies were first developed in 2006. Various commercial-grade Linux server distributions such as Oracle, Red Hat, and Canonical’s Ubuntu have their own live patching services.

Organizations are good at adopting new technologies but not so good at changing how they handle software patching, he noted. The IT industry still does traditional patching as if nothing has changed in the past 30 years of computing.

Wrong approach, he warned. Nothing about our technology stack and the architecture of the things that we use is the same. We insist on shoehorning traditional patching into everything, he complained.

“The real problem is not live patching. The real problem is changing people’s mindset towards live patching. It is more than proven that it works,” Correia said.

The Endor Labs report supports his conviction that live patching needs to be adopted more widely. For example, across six ecosystems, 47% of advisories in public vulnerability databases do not contain any code-level vulnerability information. Without this, it is impossible for organizations to establish whether known vulnerable functions can be exploited in their applications.

For instance, updating the top 20 open-source components to non-vulnerable versions in the Python ecosystem would remove more than 75% of all vulnerability findings. For Java, it would remove 60% of the vulnerabilities.

For npm (Node Package Manager), the open-source package repository engineers use to develop applications and websites, the same step would remove 44% of vulnerabilities. Phantom dependencies, which are invisible to security tools, account for 56% of reported library vulnerabilities.

Endor Labs researchers also found that 95% of open-source dependency version upgrades have the potential to break applications.

Correia asserted that live patching solves those issues and a whole lot more and is more than proven to secure your system much faster than traditional patching.

“It is non-disruptive, and there is still this unwillingness to change your methodology because it will impact your methodology. It will impact it in a good way. You do not need maintenance windows anymore,” he explained.

In some quarters, even IT experts are hesitant to adopt live patching or are prevented from doing so. The new process is different from what they have done all these years and from what they were likely taught when they started the job.

“How do you overcome that? Well, we try to scream out from the highest hill we can find every single day. It has to be education,” he offered.

What exasperates Correia is the needless security breaches that occur because live patching is not universally applied. When an unpatched vulnerability impacts millions of servers worldwide, the problem could have been prevented or fixed within three or four minutes of its being made public.

“You could have had the patches applied, updating your systems within a couple of minutes after this was disclosed so none of your systems needed to be affected. You did not need to suffer a breach because of it,” Correia lamented.

This urgency touches on all computing levels with the caveat that both academia and government are more susceptible to this problem. U.S. government regulations now impose stricter security guidelines on government agencies.

Within academia, however, clever students and internal workers love to test their skills and try to breach systems on campus. Correia knows that firsthand from years of working as a systems administrator for universities. Other industries have their own compliance issues to meet.

TuxCare achieves live patching through a four-step process that begins with monitoring announcements for new vulnerabilities affecting the Linux kernel.

“Usually, the fixes are simple. Either it is off by one mistake, or a balance check that is missed, or it is a variable used when it has been freed. Basic issues that can be fixed very easily with one or a couple of lines of code,” Correia explained.

TuxCare’s code engineers look for the differences before and after the fix is deployed. They apply those differences to other Linux server distros with the same configurations.
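The idea of isolating the difference between the code before and after a fix can be sketched with Python’s standard `difflib` module. This is an illustration only and not TuxCare’s tooling: kernel code is written in C, and the `read_item` function and file names here are hypothetical stand-ins for a fix that adds a missing range check.

```python
import difflib

# Hypothetical code before the fix: no check that the index is in range.
before = [
    "def read_item(buf, index):",
    "    return buf[index]",
]

# Hypothetical code after the fix: two added lines reject bad indexes.
after = [
    "def read_item(buf, index):",
    "    if index < 0 or index >= len(buf):",
    "        raise IndexError('index out of range')",
    "    return buf[index]",
]

# unified_diff yields header lines, then context lines and +/- changes.
patch = list(difflib.unified_diff(
    before, after, fromfile="before.py", tofile="after.py", lineterm=""))

# Keep only real additions and removals, skipping the +++/--- file headers.
added = [line for line in patch
         if line.startswith("+") and not line.startswith("+++")]
removed = [line for line in patch
           if line.startswith("-") and not line.startswith("---")]

print("\n".join(patch))
```

The resulting diff contains only the two added lines, which matches Correia’s point that many fixes amount to one or a couple of lines of code.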

Correia noted that live kernel patching on Linux servers is much different from patching a consumer desktop Linux distribution. The big difference is that servers need live patching, while desktops generally do not.

Linux desktops receive regular security patches and system updates, either on a rolling basis or according to a recurring schedule, depending on the distribution. Some systems, like Ubuntu and Linux Mint, even provide kernel updates and fixes without waiting for a new distribution release.

Unlike server environments, rebooting desktops for updates is not a significant issue. Desktop reboots typically complete within a few minutes, minimizing disruption.

“There is a difference between a patch and an update. An update is a new, more minor version of a package. It can contain bug fixes, performance improvements, new features, edits at the command line, and other enhancements,” Correia explained.

A patch is a partial snippet of code that fixes a vulnerability in the existing version. These patches fix vulnerabilities without latency so that the existing implementation can run more securely and system administrators can hold off on rebooting until the next regular maintenance window, he added.

Some software developers disagree with the open-source community on licensing and compliance issues, arguing that the community needs to redefine what constitutes free open-source code.

The term “open washing” has emerged, referring to what some industry experts claim is the practice of AI companies misusing the “open source” label. As the artificial intelligence rush intensifies, efforts to redefine terms for AI processes have only added to the confusion.

Recent accusations that Meta “open washed” the description of its Llama AI model as true open source fueled the latest volley in the technical confrontation. Some in the industry, like Ann Schlemmer, CEO of open-source database firm Percona, have suggested that open-source licensing be replaced with a “fair source” designation.

Schlemmer, a strong advocate for adherence to open-source principles, expressed concern over the potential misuse of open-source terminology. She wants clear definitions and guardrails for AI’s inclusion in open source that align with understanding the core principles of open-source software.

“What does open-source software mean when it comes to AI models? [It refers to] the code is available, here’s the licensing, and here’s what you can do with it. Then we are piling on AI,” she told LinuxInsider.

The use of AI data is being mixed in as if it were software, which is where the confusion within the industry originates.

“Well, the data is not the software. Data is data. There are already privacy laws to regulate that use,” she added.

The Open Source Initiative (OSI) released an updated definition for open-source AI systems on Oct. 28, encouraging organizations to do more instead of slapping the “open source” term on AI work. OSI is a California-based public benefit corporation that promotes open source worldwide.

In a published interview elsewhere, OSI’s Executive Director Stefano Maffulli said that Meta’s labeling of the Llama foundation model as open source confuses users and pollutes the open-source concept. This action occurs as governments and agencies, including the European Union, increasingly support open-source software.

In response, OSI issued version 1.0 of the Open Source AI Definition (OSAID) to define more explicitly what qualifies as open-source AI. The document follows a year-long global community design process. It offers a standard for community-led, open, and public evaluations to validate whether an AI system can be deemed open-source AI.

“The co-design process that led to version 1.0 of the Open Source AI Definition was well-developed, thorough, inclusive, and fair,” said Carlo Piana, OSI board chair, in the press release.

The new definition requires open source models to provide enough information to enable a skilled person to use training data to recreate a substantially equivalent system using the same or similar data, noted Ayah Bdeir, lead for AI strategy at Mozilla, in the OSI announcement.

“[It] goes further than what many proprietary or ostensibly open source models do today,” she said. “This is the starting point to addressing the complexities of how AI training data should be treated, acknowledging the challenges of sharing full datasets while working to make open datasets a more commonplace part of the AI ecosystem.”

The text of the OSAID v.1.0 and a partial list of the global stakeholders endorsing the definition are available on the OSI website.

Schlemmer, who did not participate in writing OSI’s open-source definition, said she and others have concerns about the OSI content. OSAID does not resolve all the issues, she contended, and some content needs to be backtracked.

“Clearly, this is not said and done right, even by their own admitting. That reception has been overwhelming, but not in the positive sense,” Schlemmer added.

She compared the growing practice of loosely referring to something as an open-source product to what occurs in other industries. For example, the food industry uses the words “organic” or “natural” to suggest an assumption of a product’s contents or benefit to consumers.

“How much [of labeling a software product open source] is a marketing ploy?” she questioned.

Open-source supporters often boast about how widely the technology is deployed globally. License enforcement problems are cited only rarely.

Schlemmer admitted that economic pressures drive changes in open-source licenses. It often becomes a balancing act between sharing free open-source code and monetizing software development.

For example, companies like MongoDB, her own Percona, and Elastic have adapted their licensing strategies to balance commercial interests with open-source principles. In these cases, license violations or enforcement were not involved.

“Several tools exist in the ecosystem, and compliance groups in corporate departments help people be compliant. Particularly in the larger organizations, there are frameworks,” said Schlemmer.

Individual developers may not recognize all those nuances. However, many license changes come down to the project’s economic value to its original owner.

Schlemmer is optimistic about the future of open source. Developers can build upon open-source code without violating licenses. However, changes in licensing can limit their ability to monetize.

These concerns highlight the potential erosion of open-source adoption due to license changes and the need for ongoing vigilance. She cautioned that it will take continuous evolution of open-source licensing and adaptation to new technologies and market pressures to resolve lingering issues.

“We must keep going back to the core tenet of open-source software and be very clear as to what that means and doesn’t mean,” Schlemmer recommended. “What problem are we trying to solve as technology evolves?”

Some of those challenges have already been addressed, she added. We have a framework for the open-source definition with clear labels and licenses.

“So, what’s this new concept? Why does what we already have no longer apply when we reference back?”

That is what needs to be aligned.

AI-driven systems have become prime targets for sophisticated cyberattacks, exposing critical vulnerabilities across industries. As organizations increasingly embed AI and machine learning (ML) into their operations, the stakes for securing these systems have never been higher. From data poisoning to adversarial attacks that can mislead AI decision-making, the challenge extends across the entire AI/ML lifecycle.

In response to these threats, a new discipline, machine learning security operations (MLSecOps), has emerged to provide a foundation for robust AI security. Let’s explore five foundational categories within MLSecOps.

AI systems rely on a vast ecosystem of commercial and open-source tools, data, and ML components, often sourced from multiple vendors and developers. If not properly secured, each element within the AI software supply chain, whether it’s datasets, pre-trained models, or development tools, can be exploited by malicious actors.

The SolarWinds hack, which compromised multiple government and corporate networks, is a well-known example. Attackers infiltrated the software supply chain, embedding malicious code into widely used IT management software. Similarly, in the AI/ML context, an attacker could inject corrupted data or tampered components into the supply chain, potentially compromising the entire model or system.

To mitigate these risks, MLSecOps emphasizes thorough vetting and continuous monitoring of the AI supply chain. This approach includes verifying the origin and integrity of ML assets, especially third-party components, and implementing security controls at every phase of the AI lifecycle to ensure no vulnerabilities are introduced into the environment.
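One simple form of integrity verification is comparing a downloaded artifact against a checksum published by its source. The Python sketch below assumes the publisher provides a SHA-256 digest through a trusted channel. The function names `sha256_of` and `verify_artifact` are hypothetical, and a real pipeline would likely add signature checks on top of this.

```python
import hashlib
import hmac

def sha256_of(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file, read in chunks to limit memory use."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()

def verify_artifact(path, expected_hex):
    """Return True only if the file's digest matches the published one."""
    # compare_digest avoids leaking where the two strings first differ.
    return hmac.compare_digest(sha256_of(path), expected_hex.strip().lower())
```

A model or dataset file that fails this check should not be loaded into the training or serving environment.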

In the world of AI/ML, models are often shared and reused across different teams and organizations, making model provenance — how an ML model was developed, the data it used, and how it evolved — a key concern. Understanding model provenance helps track changes to the model, identify potential security risks, monitor access, and ensure that the model performs as expected.

Open-source models from platforms like Hugging Face or Model Garden are widely used due to their accessibility and collaborative benefits. However, open-source models also introduce risks, as they may contain vulnerabilities that bad actors can exploit once they are introduced to a user’s ML environment.

MLSecOps best practices call for maintaining a detailed history of each model’s origin and lineage, including an AI-Bill of Materials, or AI-BOM, to safeguard against these risks.

By implementing tools and practices for tracking model provenance, organizations can better understand their models’ integrity and performance and guard against malicious manipulation or unauthorized changes, including but not limited to insider threats.

Strong governance, risk, and compliance (GRC) measures are essential for ensuring responsible and ethical AI development and use. GRC frameworks provide oversight and accountability, guiding the development of fair, transparent, and accountable AI-powered technologies.

The AI-BOM is a key artifact for GRC. It is essentially a comprehensive inventory of an AI system’s components, including ML pipeline details, model and data dependencies, license risks, training data and its origins, and known or unknown vulnerabilities. This level of insight is crucial because one cannot secure what one does not know exists.
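As a rough picture of what such an inventory might hold, here is a minimal Python sketch. The class and field names (`Component`, `AIBOM`, `missing_provenance`) are hypothetical and do not follow any published AI-BOM schema; they only mirror the kinds of items listed above.

```python
import json
from dataclasses import dataclass, field, asdict

@dataclass
class Component:
    name: str
    kind: str       # e.g. "model", "dataset", or "library"
    version: str
    license: str
    source: str     # where the component was obtained
    sha256: str = ""  # integrity digest; empty if unknown
    known_vulnerabilities: list = field(default_factory=list)

@dataclass
class AIBOM:
    system: str
    components: list = field(default_factory=list)

    def missing_provenance(self):
        """Names of components with no recorded source or digest."""
        return [c.name for c in self.components
                if not c.source or not c.sha256]

    def to_json(self):
        """Serialize the inventory so it can be stored or audited."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)
```

Even a small inventory like this lets a team ask which components lack a recorded origin, which is the visibility the AI-BOM is meant to provide.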

An AI-BOM provides the visibility needed to safeguard AI systems from supply chain vulnerabilities, model exploitation, and more. This MLSecOps-supported approach offers several key advantages, like enhanced visibility, proactive risk mitigation, regulatory compliance, and improved security operations.

In addition to maintaining transparency through AI-BOMs, MLSecOps best practices should include regular audits to evaluate the fairness and bias of models used in high-risk decision-making systems. This proactive approach helps organizations comply with evolving regulatory requirements and build public trust in their AI technologies.

AI’s growing influence on decision-making processes makes trustworthiness a key consideration in the development of machine learning systems. In the context of MLSecOps, trusted AI represents a critical category focused on ensuring the integrity, security, and ethical considerations of AI/ML throughout its lifecycle.

Trusted AI emphasizes the importance of transparency and explainability in AI/ML, aiming to create systems that are understandable to users and stakeholders. By prioritizing fairness and striving to mitigate bias, trusted AI complements broader practices within the MLSecOps framework.

The concept of trusted AI also supports the MLSecOps framework by advocating for continuous monitoring of AI systems. Ongoing assessments are necessary to maintain fairness, accuracy, and vigilance against security threats, ensuring that models remain resilient. Together, these priorities foster a trustworthy, equitable, and secure AI environment.

Within the MLSecOps framework, adversarial machine learning (AdvML) is a crucial category for those building ML models. It focuses on identifying and mitigating risks associated with adversarial attacks.

These attacks manipulate input data to deceive models, potentially leading to incorrect predictions or unexpected behavior that can compromise the effectiveness of AI applications. For example, subtle changes to an image fed into a facial recognition system could cause the model to misidentify the individual.

By incorporating AdvML strategies during the development process, builders can enhance their security measures to protect against these vulnerabilities, ensuring their models remain resilient and accurate under various conditions.

AdvML emphasizes the need for continuous monitoring and evaluation of AI systems throughout their lifecycle. Developers should implement regular assessments, including adversarial training and stress testing, to identify potential weaknesses in their models before they can be exploited.

By prioritizing AdvML practices, ML practitioners can proactively safeguard their technologies and reduce the risk of operational failures.

AdvML, alongside the other categories, demonstrates the critical role of MLSecOps in addressing AI security challenges. Together, these five categories highlight the importance of leveraging MLSecOps as a comprehensive framework to protect AI/ML systems against emerging and existing threats. By embedding security into every phase of the AI/ML lifecycle, organizations can ensure that their models are high-performing, secure, and resilient.

Not all Linux distributions provide platforms for enterprise and non-business adopters. Red Hat Enterprise Linux (RHEL) and the Fedora Project let users keep their Linux computing all in the family.

Both the enterprise and community versions have been upgraded over the last few weeks. RHEL is a commercial distribution available through a subscription and does not rely solely on community support. On the other hand, Fedora Linux is a free distro supported and maintained by the open-source community. In this case, Red Hat is the Fedora Project’s primary sponsor.

However, independent developers in the Fedora community also contribute to the project. Often, Fedora Linux is a proving ground for new features that ultimately become part of the RHEL operating system.

While Fedora caters to developers and enthusiasts, RHEL focuses on delivering enterprise-grade solutions. What’s the difference? Each edition caters to the needs of users’ business or consumer goals. Of course, using Fedora comes at a great price: it is free to download.

On Nov. 13, Red Hat released Red Hat Enterprise Linux 9.5 with improved functionality in deploying applications and more effectively managing workloads across hybrid clouds while mitigating IT risks from the data center to public clouds to the edge. Matt Miller, the Fedora Linux project leader, announced the release of Fedora 41 on Oct. 29.

According to global market intelligence firm IDC, organizations struggle to strike a balance between maintaining their Linux operating system environments and their workloads, which are hampered by time and resource constraints. The proliferation of the cloud and next-generation workloads such as AI and ML worsens their computing productivity.

RHEL standardization increased the agility of IT infrastructure administration management teams by consolidating OSes, automating highly manual tasks such as scaling and provisioning, and decreasing the complexity of deployments. As a result, infrastructure teams spent 26% more time on business and infrastructure innovation, Red Hat noted.

RHEL 9.5 delivers enhanced capabilities to bring more consistency to the operating system underpinning rapid IT innovations. This impacts the use of artificial intelligence (AI) in edge computing to make these booming advancements an accessible reality for more organizations.

Enterprise IT complexity is growing exponentially, fueled by the rapid adoption of new technologies like AI. This growth affects both the applications Red Hat develops and the environments in which they operate, according to Gunnar Hellekson, VP and GM for Red Hat Enterprise Linux.

“While more complexity can impact the attack surface, we are committed to making Red Hat Enterprise Linux the most secure, zero-trust platform on the market so businesses can tackle each challenge head-on with a secure base at the most fundamental levels of a system. This commitment enables the business to embrace the next wave of technology innovations,” he told LinuxInsider.

The release includes a collection of Red Hat Ansible Content subscriptions that automate everyday administrative tasks at scale. The latest version also adds several new system roles, including a new role for sudo, a command-line utility in Linux, to automate the configuration of sudo at scale.

By leveraging this capability, users can execute commands typically reserved for administrators while proper guardrails ensure rules are managed effectively. With automation, users with elevated privileges can implement sudo configurations securely and consistently across their environments, helping organizations reduce complexity and improve operational efficiency.

Increased platform support for confidential computing enables data protection for AI workloads and lowers the attack surface for insider threats. By preventing potential threats from viewing or tampering with sensitive data, confidential computing gives enterprises more opportunities to use AI securely to review large amounts of data while still maintaining data segmentation and adhering to data compliance regulations.

The Image Builder feature advances a “shift left” approach by integrating security testing and vulnerability fixes earlier in the development cycle. This methodology delivers pre-hardened image configurations to customers, enhancing security while reducing setup time. With these built-in capabilities, users can configure systems without being security experts.

Management tools simplify system administration. Users can automate manual tasks, standardize deployment at scale, and reduce system complexities.

New file management capabilities in the web console allow routine file management tasks without using the command line, simplifying actions such as browsing the file system, uploading and downloading files, changing permissions, and creating directories.

Another benefit addresses cloud computing storage. Container-native innovation at the platform level fully supports Podman 5.0, the latest version of the open-source container engine. It gives developers a powerful tool for building, managing, and running containers in Linux environments.

According to Greg Macatee, research manager for infrastructure software platforms and worldwide infrastructure research at IDC, companies using the new RHEL release validated that the platform simplified management while reducing overall system complexity.

“They also noted that it radically reduced the time required for patching while simplifying kernel modifications and centralizing policy controls. They further called out the value of automation, better scalability, and access to Red Hat Enterprise Linux expertise,” he told LinuxInsider.

Application streams provide the latest curated developer tools, languages, and databases needed to fuel innovative applications. Red Hat Enterprise Linux 9.5 includes PG Vector for PostgreSQL, new versions of node.js, GCC toolset, Rust toolset, and LLVM toolset.

While Java Dev Kit (JDK) 11 reached its end of maintenance in RHEL 9, this new release continues supporting customers using it. The new default JDK 17 brings new features and tools for building and managing modern Java applications while maintaining backward compatibility to keep JDK upgrades consistent for applications and users.

The Fedora Project calls its community operating system Fedora Workstation. It provides a polished Linux OS for laptop and desktop computers and a complete set of tools for developers and consumers at all experience levels.

Fedora Server provides a flexible OS for users needing the latest data center technologies. The community also has Fedora IoT for foundation ecosystems, Fedora Cloud edition, and Fedora CoreOS for container-focused operations.

According to Miller’s announcement in the online Fedora Magazine, Fedora 41 includes updates to thousands of packages, ranging from tiny patches to extensive new features. These include a new major release of the command-line package management tool, DNF (Dandified YUM), which improves performance and enhances dependency resolution.

The Workstation edition offers various desktop environment options, known as spins, including Xfce, LxQt, and Cinnamon. It also introduces a new line of Atomic-flavored desktops, which streamline updates by bundling them into a single image that users install, eliminating the need to download multiple package updates.

Fedora Workstation 41 is based on Gnome 47. One of its main changes is its customization potential. In the Appearance setting, you can change the standard blue accent color of Gnome interfaces, choosing from an assortment of vibrant colors. Enhanced small-screen support gives users with lower-resolution displays optimized icons scaled for easier interaction and better visibility, and new-style dialogue windows enhance usability across many screen sizes.

The merger of two popular open-source communities could sharpen the focus on bolstering online privacy and web-surfing anonymity.

The Amnesic Incognito Live System, or Tails, and the anonymity network Tor Project, short for The Onion Router, announced in late September a merger to unite operations and resources from both software communities into a single entity. Merger talks started in late 2023, when the Tails leadership needed a solution after maxing out its operational funds. Both entities share the common goal of online anonymity.

A joint statement explained that the merger solved expansion and operational issues for both parties. Tails developers could avoid independently expanding operational capacity by combining with the Tor Project’s “larger and established operational framework.”

“By joining forces, the Tails team can now focus on their core mission of maintaining and improving Tails OS, exploring more and complementary use cases while benefiting from the larger organizational structure of The Tor Project,” according to the announcement.

The merger is significant as it unifies two similar projects, enhancing resources and efficiency in developing robust online privacy tools, according to Jason Soroko, senior fellow at Sectigo, a comprehensive certificate lifecycle management firm. He views this merger as substantially impacting privacy concerns by improving tools that better protect users from surveillance and data misuse.

“Increased focus on internet privacy is essential, and open-source projects should lead in providing transparent, collaborative solutions to safeguard personal data,” he told LinuxInsider.

Tor and Tails are most often used together, noted Soroko. Tails is a live Linux operating system that routes all internet traffic through the Tor network by default. Tor routes traffic through multiple volunteer-operated nodes, making it much harder to trace.

Tails, a free live operating system, ensures that no data is stored on the device after use, providing a secure environment that leaves no traces. This security measure is essential for users in high-risk situations, such as journalists, activists, or whistleblowers who need to protect their identities and activities from surveillance and censorship, he explained.

Both organizations aim to protect users from surveillance and censorship over the internet. Tails already used the Tor network to enhance online privacy. The free Tor browser, a tool frequently used to navigate the dark web, remains hidden from visited websites to avoid third-party trackers and ads.

Tor Browser is a standalone web browser that connects users to the internet through the Tor network's relays rather than directly, enabling anonymous connections from IP addresses that cannot be linked to a specific service or individual.

Casey Ellis, founder and advisor at crowdsourced cybersecurity firm Bugcrowd, agreed that this is an interesting move that makes a lot of sense. Sharing the business infrastructure will free up the Tails team, and the core group will have the time and opportunity to focus on the evolving needs of a privacy-focused OS like Tails.

“This merger involving an operating system such as Tails was long-awaited … Hopefully, this move broadens awareness of and contribution to the maintenance and improvement of both projects,” he told LinuxInsider.

In enterprises that monitor web traffic for security purposes and for the use of unapproved software and operating systems, the merger will definitely raise privacy concerns. However, for legitimate and privacy-focused users, this will be a boon, offered Mayuresh Dani, manager of security research for the Threat Research Unit at Qualys.

“Protection against the abuse of personal data should definitely be one of the topmost pillars in protecting enterprises,” he told LinuxInsider.

In today’s interconnected world, everyone has an app for everything. All these apps share data with their creators as analytics to improve their services or products.

“Most of us are not aware of the information being collected at all. If a threat actor gains access to this personalized information, then a lot of attacks are possible,” he added.

Growing concerns about internet data abuse are impacting both consumers and enterprises, suggested Arjun Bhatnagar, co-founder and CEO of privacy company Cloaked.

“The demand for privacy as a response to a growing abuse of personal data is rapidly becoming one of the most important issues of our time,” he told LinuxInsider.

Strong interest is mounting in understanding how to tackle the abuse of data. The effects range from consumers actively disengaging by deactivating online accounts to businesses facing potential bankruptcy (e.g., 23andMe) due to reputation loss after a data breach.

This growing privacy concern is rooted in high-profile data breaches, manipulative AI algorithms, misuse of data for targeted advertising, unethical data-sharing practices, and intrusive surveillance by governments and corporations, which are entering daily discourse among individuals, Bhatnagar detailed.

He agreed that the merger, by uniting two trusted privacy tools, could strengthen efforts to protect users from surveillance and data exploitation.

“With shared resources, the merged entity can offer an integrated, more robust defense against surveillance and data abuse,” he said.

According to Bhatnagar, the privacy focus should be on ensuring transparency, accountability, and robust security practices, regardless of the model. As privacy threats increase, both open-source and proprietary solutions must commit to prioritizing user data protection.

“Ultimately, the priority should be creating solutions that empower users to maintain control over their data while minimizing the risks of exploitation and abuse,” he urged.

While some in the business and technology industries push for software developers to take an active role in safeguarding online privacy, others in the software development field argue that resolving the abuse of personal data on the internet is above their pay grade.

Decisions about personal data collection are typically made by product managers, who determine what data to collect, guided by relevant laws and guidelines.

However, they are incentivized to collect as much as possible, argued Brian Behlendorf, a technologist, computer programmer, and leading figure in the open-source software movement. He also is the general manager of the Open Source Security Foundation (OSSF).

“Other business roles inside a typical company decide with whom and how to share data and then direct engineers to implement,” he told LinuxInsider.

Behlendorf argued that, in most cases, software developers are not empowered to create software that minimizes the collection or sharing of personal data. Their convictions about this might not outweigh their employers’ designs and mandates, he offered as a personal opinion.

He emphasized that his comments do not represent OSSF (which is hosted by The Linux Foundation) nor affiliations with Mozilla or the Electronic Frontier Foundation (EFF).

“On a personal level, software developers I know are as angry as average citizens are about the ways their data is being used today,” Behlendorf continued.

Developers are even more sensitive to what happens beneath the user interface layer and the potential for that abuse, he added.

Tor helps ensure your web traffic is difficult for anyone, including nation-state actors, to trace. Tails helps lock down your local computing environment in ways that make it difficult for even government-sponsored actors to hack.

Behlendorf noted that these are tools that average consumers could use, and the more consumer usage they get, the better. The most compelling use cases are for users engaged in sensitive work.

People in the human rights and journalism communities already know about Tor, but a much smaller number know about Tails.

“Hopefully, this merger helps that crowd with intense needs also start to use Tails and benefit from its security promises and reassures them that the organizations behind these two pieces are better resourced,” he offered.

“More broadly, among the consumer public, I don’t see as much impact, though I hope it creates positive pressure among the more popular social network apps to be more secure by default.”

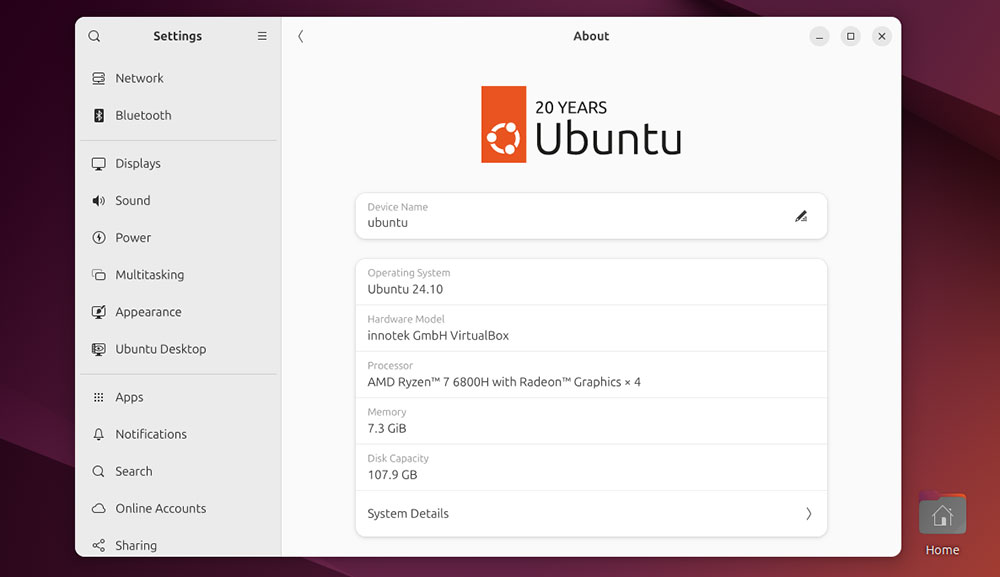

Canonical released Ubuntu 24.10 “Oracular Oriole” on Oct. 10, 2024. This latest version brings performance improvements and enhanced hardware support while also marking a special milestone — 20 years since Ubuntu’s first stable release.

As the name suggests, Ubuntu 24.04 LTS (Long-Term Support) is still being supported and will continue to receive five years of free security maintenance updates.

In this guide, you’ll learn how to upgrade your existing Ubuntu 24.04 installation to the latest Ubuntu 24.10.

As Ubuntu 24.04 is an LTS release, there’s no requirement to upgrade since it will be supported for at least five years. You can extend this for another five years through an Ubuntu Pro subscription, which is free for personal devices. Paid subscribers who install the Legacy Support add-on can benefit from an additional two years of coverage.

Ubuntu 24.10 will receive security and maintenance updates for just nine months out of the box. However, it is also compatible with Ubuntu Pro and comes with several upgrades.

For instance, Ubuntu now ships with the latest available version of the upstream Linux kernel. The kernel version is determined by Ubuntu’s freeze date, even if the kernel is still in Release Candidate (RC) status. This approach allows users to benefit from the latest features, though it may come at the possible expense of system stability.

The main flavor of Ubuntu 24.10 also ships with the latest Gnome 47 desktop, an upgraded App Center, an experimental Security Center, and better management of Snap packages.

See the official Oracular Oriole announcement to discover all the latest features in Ubuntu.

Ubuntu 24.04 supports upgrading via the command line or the built-in software updater.

The simplest way to upgrade is to launch the software updater to ensure you have the latest version of Ubuntu 24.04 (currently 24.04.1). Then, you can modify the updater settings to allow you to upgrade to a regular non-LTS release of Ubuntu.

Rerunning the software updater will display the option to upgrade your OS.

If you choose to proceed, the Ubuntu upgrade tool will connect to the relevant software channels and download the necessary packages.

Ubuntu disables third-party repositories and PPAs by default during upgrade. However, once the OS is upgraded to version 24.10, you can re-enable these via the command line.

Upgrading Ubuntu is a relatively simple process. Still, whenever making any significant changes to your OS, it’s best to take some steps to keep your data safe:

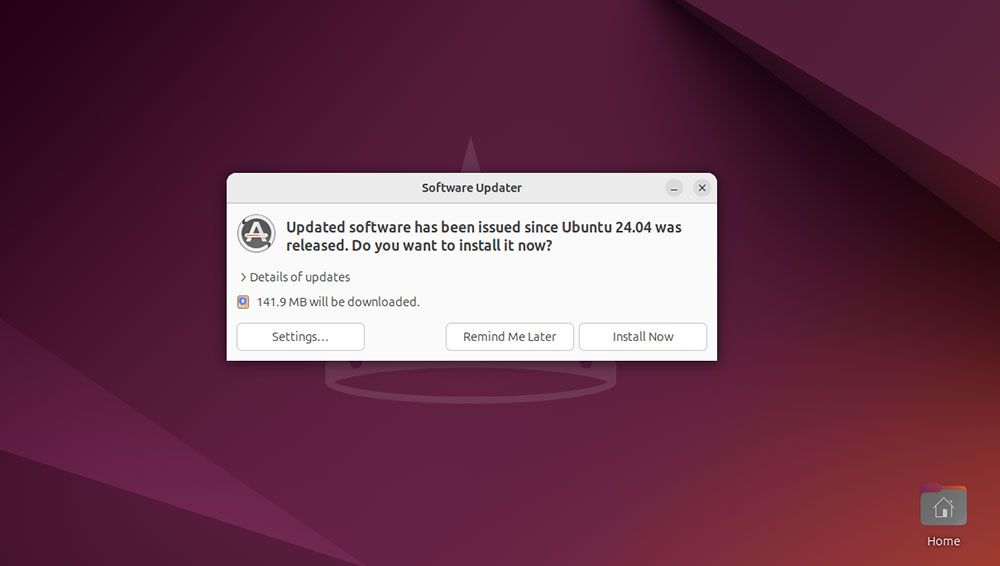

Next, check that your installation of Ubuntu 24.04 is fully up to date. The easiest way to do this is to open “Activities” and enter “software” to launch Software Updater.

Your computer may need to restart to finish installing updates. If so, select “Restart Now” from the Software Updater menu to continue.

You can also update Ubuntu 24.04 via the command line. Open Terminal, then run:

sudo apt update && sudo apt full-upgrade

Press “Y” to confirm you wish to update the OS to the most recent version.

Once the system reboots, repeat the instructions outlined in the previous step to launch the Software Updater.

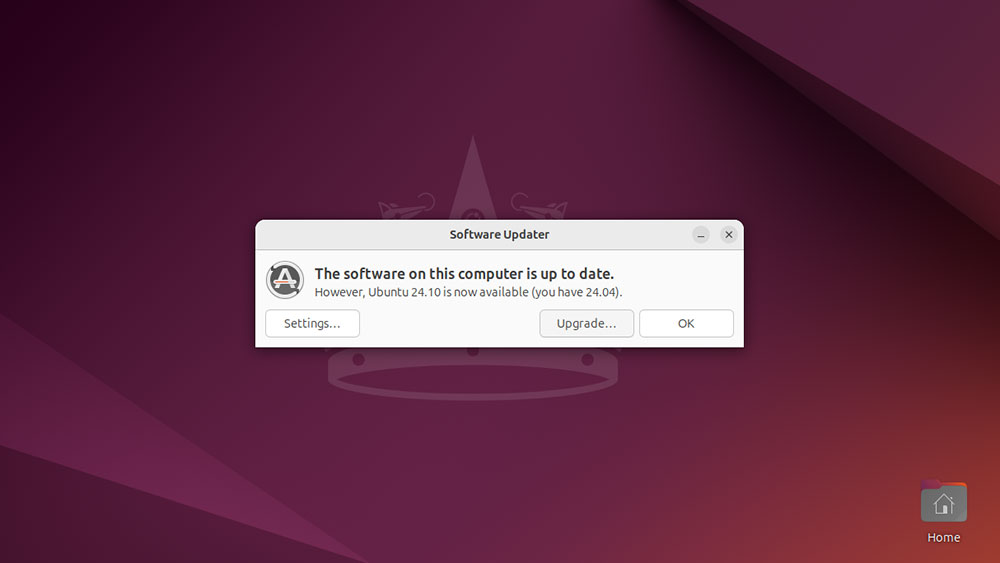

If further updates are available, download and apply them now before continuing. Once these have all been applied, you’ll see a Software Updater notification saying your system is up to date.

Choose “Settings” to launch “Software & Updates.” From here, select the “Updates” tab. In the dropdown menu labeled “Notify me of a new Ubuntu version,” choose “For any new version.” This means Ubuntu 24.10 will appear as an upgrade option, even though it’s not a Long-Term Support release.

Choose “Close,” then relaunch the Software Updater. You’ll now see a notification saying that Ubuntu 24.04 is up to date, but Ubuntu 24.10 is available. Choose “Upgrade” to continue.

If this notification doesn’t appear, you can also manually check for the latest version of Ubuntu via Terminal. Open the utility and run:

sudo update-manager -c

Once the Ubuntu upgrade utility launches, it will display a brief overview of everything new in Oracular Oriole. There’s also a link to the official release notes if you need a detailed breakdown.

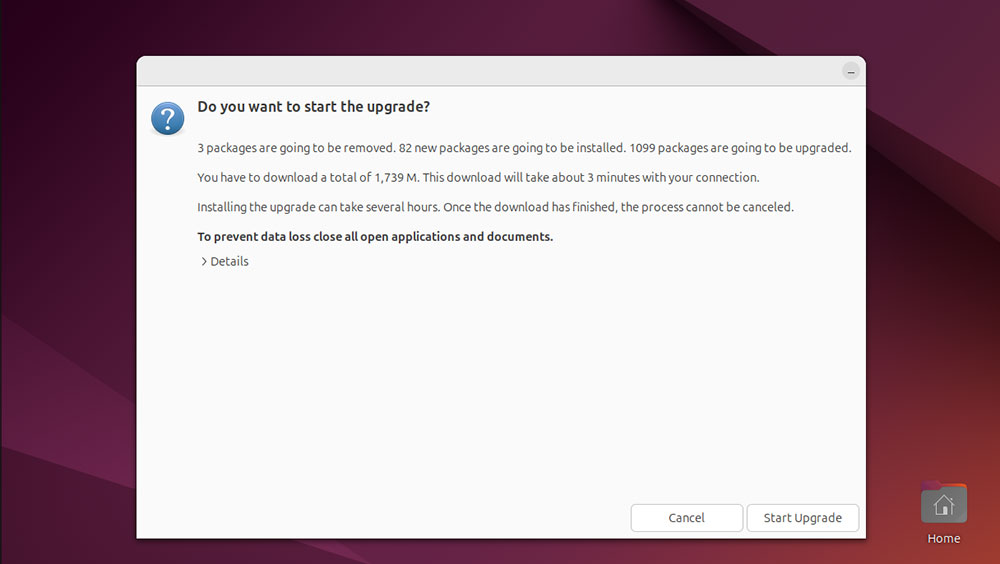

After reading through these, click “Start Upgrade” to continue. This will download the latest release upgrade tool.

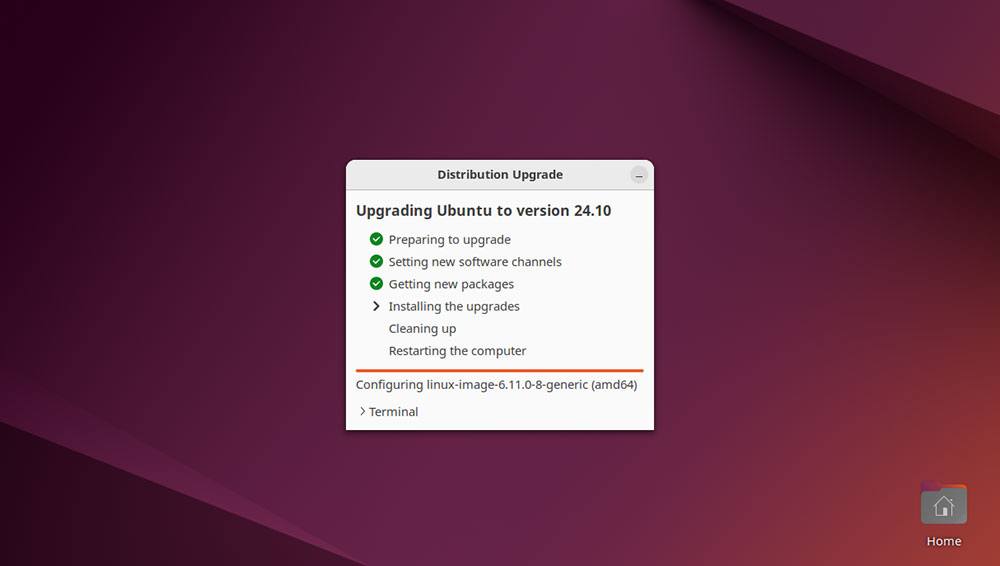

Next, the “Distribution Upgrade” will launch. You’ll notice a tilde next to “Preparing to Upgrade.” The tool will then update the OS software channels.

Once this is done, you’ll see a new window asking, “Do you want to start the upgrade?” Click “Details” for more information about the packages that will be changed/removed. Choose “Start Upgrade” to continue.

During the upgrade process, the system notified us several times that Oracular was not responding. We chose “Wait” in each case, and the upgrade continued without issue.

Once you start the upgrade, Ubuntu will inform you that the lock screen will be disabled during the process. Select “Close” to continue.

The upgrade tool will then begin downloading and installing the necessary packages. During this stage, the desktop wallpaper will change to the default background for Oracular Oriole.

You may also see a prompt regarding “libc6” during the upgrade, asking whether Ubuntu should restart services during package upgrades without asking. Click “Next” to confirm.

If you want more detailed information on the installation process, choose “Terminal.”

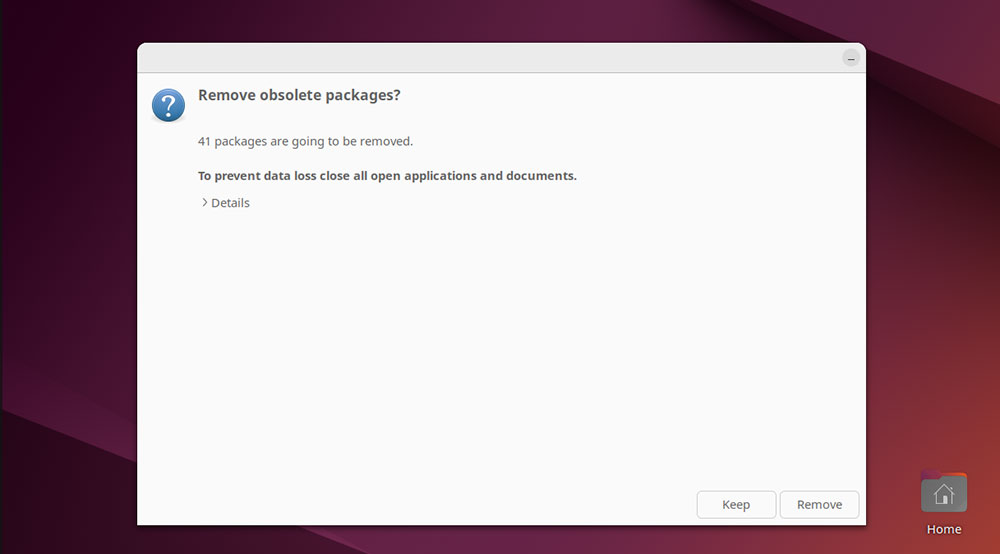

Once the installation stage is complete, the release upgrade tool will start cleaning up. A window asks if you wish to “Remove obsolete packages.”

Click on “Details” to view more information about these, then choose “Keep” or “Remove” as you see fit. If you’re uncertain, it’s usually best to remove these as they take up space on your hard drive.

Once you’ve dealt with the obsolete packages, Ubuntu will prompt you to restart the system to complete the upgrade.

After logging in to the Ubuntu desktop, you can verify that the upgrade has worked by opening “Activities” and launching “About” from System Settings. From here, you can view key information, including the operating system, which should now display Ubuntu 24.10.

If you prefer using the CLI to check your OS version, open Terminal and run:

lsb_release -a

The OS “Release” should read 24.10 if the upgrade has worked successfully.
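The same check can be scripted. The following is a minimal sketch, assuming the release number is exposed as `VERSION_ID` in an os-release style file (on Ubuntu, `/etc/os-release`). The helper name `get_version_id` is hypothetical, and the demo reads a scratch file rather than the real system file.

```shell
#!/bin/sh
# Sketch: read VERSION_ID from an os-release style file.
# get_version_id is a hypothetical helper name, not an Ubuntu tool.
get_version_id() {
    # Source the file in a subshell so its variables do not leak out.
    ( . "$1" && printf '%s\n' "$VERSION_ID" )
}

# Demo with a scratch file that mimics /etc/os-release after the upgrade.
sample="$(mktemp)"
printf 'NAME="Ubuntu"\nVERSION_ID="24.10"\n' > "$sample"

if [ "$(get_version_id "$sample")" = "24.10" ]; then
    echo "upgrade verified"
else
    echo "still on an older release"
fi
```

On an upgraded machine, passing `/etc/os-release` instead of the scratch file should give the same result as reading the “Release” line from lsb_release.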

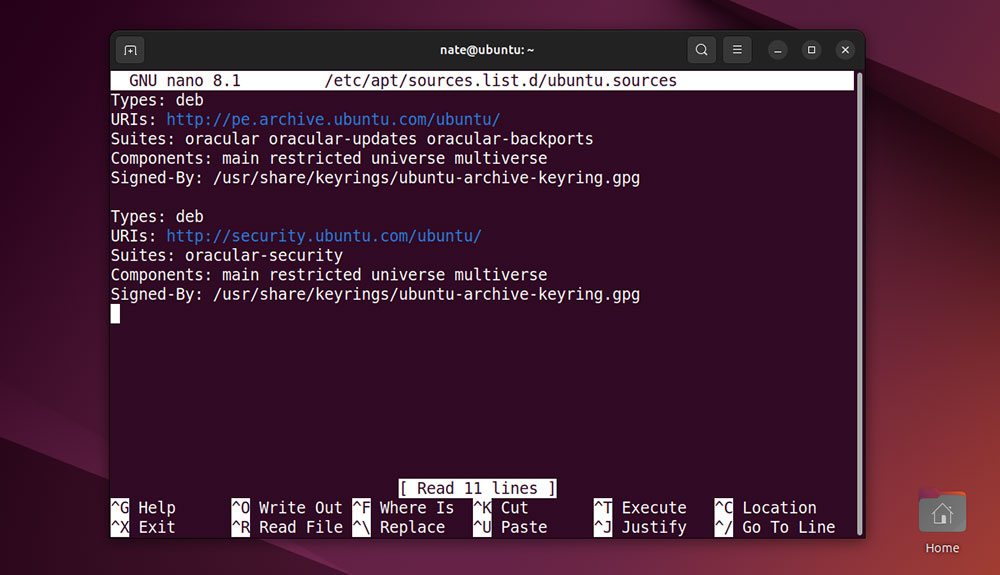

By default, whenever the Ubuntu OS is upgraded, any third-party repositories and/or PPAs are disabled. This ensures a smoother upgrade but also makes it difficult to update certain third-party software.

Luckily, you can easily enable the repos and PPAs again. To get started, open Terminal and run:

sudo nano /etc/apt/sources.list.d/ubuntu.sources

This file lists all available software sources.

If you see any third-party repos listed, use the arrow keys to scroll down and remove ‘#’ at the beginning of the line. You can now save and exit the file using Ctrl + X, then Y, then Enter.

These changes should take effect next time you run a system update.
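To find which source files still need attention, a short script can help. This is a minimal sketch under two assumptions: disabled entries in one-line `.list` files appear as commented-out `deb` lines, and disabled stanzas in deb822 `.sources` files carry an `Enabled: no` field. The helper name `list_disabled_sources` and the `example.invalid` URLs are hypothetical, and the demo runs against a scratch directory.

```shell
#!/bin/sh
# Sketch: report APT source files that contain disabled entries.
# list_disabled_sources is a hypothetical helper name.
list_disabled_sources() {
    dir="$1"
    # One-line style (*.list): a disabled entry is a commented-out "deb" line.
    grep -lE '^[[:space:]]*#[[:space:]]*deb(-src)?[[:space:]]' "$dir"/*.list 2>/dev/null
    # deb822 style (*.sources): a disabled stanza carries "Enabled: no".
    grep -liE '^Enabled:[[:space:]]*no' "$dir"/*.sources 2>/dev/null
    return 0
}

# Demo on a scratch directory standing in for /etc/apt/sources.list.d.
demo_dir="$(mktemp -d)"
printf '# deb http://example.invalid/repo stable main\n' > "$demo_dir/vendor.list"
printf 'deb http://example.invalid/ok stable main\n' > "$demo_dir/active.list"
printf 'Types: deb\nURIs: http://example.invalid/repo\nSuites: stable\nComponents: main\nEnabled: no\n' > "$demo_dir/tool.sources"

# Prints the paths of vendor.list and tool.sources, but not active.list.
list_disabled_sources "$demo_dir"
```

Pointing the function at `/etc/apt/sources.list.d` would list candidate files to review by hand. The script only reads files and changes nothing.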

Even if you didn’t need to enable any third-party repos or PPAs in the previous step, keeping your system up to date is always a good idea.

You can do this in Ubuntu 24.10 the same way as Ubuntu 24.04. As outlined in Step 1, simply open “Activities” and enter “software” to launch Software Updater.

Ubuntu 24.10 also supports updates from Terminal using the latest version of APT (3.0). To update this way, enter:

sudo apt-get update && sudo apt-get upgrade

We recommend using the Terminal for this first update because you’ll see an alert if any third-party repos/PPAs are still disabled.

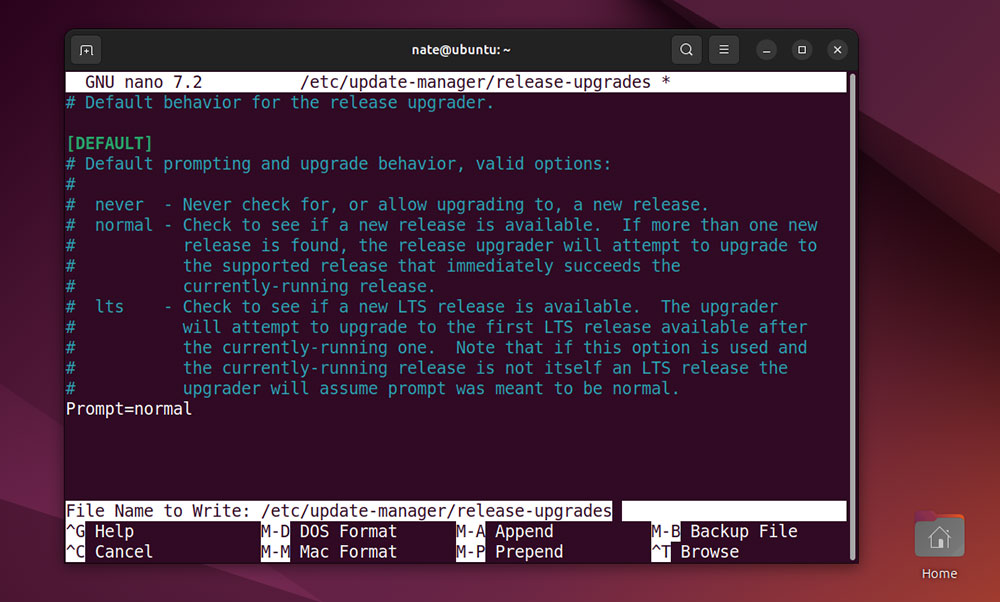

Experienced Linux users may want to upgrade Ubuntu via the command line. This can be much faster as there are no graphical windows to navigate.

To get started, you need to configure Ubuntu 24.04 to allow upgrades to non-LTS versions of the OS.

Open Terminal and run:

sudo nano /etc/update-manager/release-upgrades

From here, change Prompt=lts to Prompt=normal. Press Ctrl + X, then Y, then Enter to save and exit.
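The same edit can be made without opening an editor. Below is a minimal sketch, assuming the file contains a single line beginning with `Prompt=`. The helper name `set_upgrade_prompt` is hypothetical, and the demo works on a scratch copy so the real configuration is left untouched.

```shell
#!/bin/sh
# Sketch: set the release-upgrade prompt policy in a config file.
# set_upgrade_prompt is a hypothetical helper name, not part of Ubuntu.
set_upgrade_prompt() {
    file="$1"    # path to a release-upgrades style file
    policy="$2"  # for example: normal or lts
    tmp="$(mktemp)"
    # Rewrite the whole Prompt= line, whatever its current value,
    # then copy the result back over the original file.
    sed "s/^Prompt=.*/Prompt=${policy}/" "$file" > "$tmp" && cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Demo on a scratch copy instead of /etc/update-manager/release-upgrades.
demo_file="$(mktemp)"
printf '[DEFAULT]\nPrompt=lts\n' > "$demo_file"
set_upgrade_prompt "$demo_file" normal
grep '^Prompt=' "$demo_file"   # prints: Prompt=normal
```

On a real system, the same substitution would target /etc/update-manager/release-upgrades and would need root privileges.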

Next, ensure that Ubuntu 24.04 is fully up to date by running:

sudo apt-get update && sudo apt-get upgrade

You can now launch the upgrade tool via the command line with:

sudo do-release-upgrade

Press ‘y’, then follow the on-screen prompts to complete the upgrade. You can also enter ‘y’ to confirm the removal of obsolete packages.

We hope you found this step-by-step upgrade guide for Ubuntu 24.10 helpful, allowing you to benefit from the latest features and superior performance with the latest version of the OS.

Editor’s Note: The Ubuntu images and screenshots featured in this article are credited to Canonical.

One of the most influential teachers I’ve had was my university music professor. One piece of wisdom particularly resonates with me: he asked, “How good would you be if you did everything your teacher told you to do?” His point was not that you should unquestioningly do what you’re told. Rather, he meant that after we’re familiar enough with a discipline, we know what yields improvement at it. We know what habits, procedures, and mindsets will make us better at what we do.

Of course, he wouldn’t have made this remark if we did consistently do what we knew we should. But why don’t we? There are many possible reasons, but I believe the most common is simply laziness.

Addressing all those fundamentals is hard work and not as fun as doing the flashy stuff. If you were building a skyscraper, would you prefer to cement the foundation or construct the penthouse level? We don’t appreciate that sturdy base unless we discover, often catastrophically, that it’s faulty.

In that spirit, I wanted to be a responsible engineer and examine our foundation. The following observations were compiled with software engineering in mind, as I identified them while working in that discipline. However, these practices apply universally. For each one, I have an example of when it proved the decisive factor for success.

Check every assumption. It is alarmingly easy to assume a “truth” on faith when, in reality, it is open to debate. Effective problem-solving starts by examining assumptions because the assumptions that survive your scrutiny will dictate which approaches remain viable. If you didn’t know your intended plan rested on an unfounded or invalid assumption, imagine how disastrous it would be to proceed anyway. Why take that gamble?

Recently, I worked on a project that involved aligning software deployment to an industry best practice. However, to meet specialized requirements, the project’s leadership relied on unique definitions of common terms, deviating ever so slightly from the standard industry definitions. If I had assumed the typical industry definitions, I would have missed some requirements.

Look up every term you don’t understand. When encountering an unfamiliar term, it’s easy to expect that we can intuit it from context or that it’s marginal enough in context that we can get the gist without it. Even if that’s true, how do you know until you check? How do you know you wouldn’t understand the topic on a much deeper level if you took five minutes to look it up?

When I initially embarked on my crash course in computer science, I didn’t read very deeply into object-oriented programming. “Yeah, yeah,” I thought, “everything is an object.” But once I took the time to explore the details, I learned what traits define object-oriented programming and how those traits, in turn, dictate how it can be applied. From there, I had enough background to start considering what problems an object-oriented approach is good and bad at solving.

Check the credibility of all your sources. I’m not telling you to only read the documentation (although it’s wise to start there), but you shouldn’t ascribe credibility to a source until you’ve checked it against information you already know is credible — like the documentation. I’ve written more than one piece about the difference between the commonly accepted way and the correct way. My recent article on Linux DNS configuration is a good example.

I constantly encounter wide gulfs between what the documentation advises and what developers on forums confidently assert. For instance, I had to generate RFC 4122-compliant UUIDs. In the particular language I was using, many developers recommended using a third-party library. However, after careful research, I determined that the language’s built-in cryptographic library could already generate UUIDs. By omitting the third-party package, I reduced the number of dependencies as well as the attack surface for the application I was creating.

Take notes, then take some more notes. In the last year or so, I have made a point to capture the stray ideas, whether internalized or synthesized, instead of letting them dissipate. Since then, I’ve observed that I’m more productive and absorb more information even if I don’t reread my notes. The value of copious notes is intuitive: it takes much less time to write down too much and scan through everything than to write down too little and return to your sources to relocate what you omitted. With digital notes, it makes even more sense to record everything you read. A Ctrl-F search of even the most massive note file is far faster than retracing your investigation.

While researching how to configure and test some applications, I took notes on the criteria I used to select configurations. I noted everything on a private documentation page, even creating a flowchart to illustrate all my considerations. At the time, the page was only for checking and reinforcing my understanding. But once I realized that other developers would benefit from following in my footsteps, I already had a page ready.

Test everything you design or build. It is astounding how often testing gets skipped. A recent study showed that just under half of the time, information security professionals don’t audit major updates to their applications. It’s tempting to look at your application on paper and reason that it should be fine. But if everything worked like it did on paper, testing would never find any issues — yet so often it does. The whole point of testing is to discover what you didn’t anticipate. Because no one can foresee everything, the only way to catch what you didn’t is to test.

One time, I was writing a program to check a response payload that arbitrarily contained nested data structures of various types. While there is one overwhelmingly common data structure for the top level of such payloads, i.e., the payload root, I wrote the program to handle a variety of top-level data structures. However, I only added this flexibility after testing a wide range of payloads during development. Had I not tested with uncommon or unexpected inputs, the program could have encountered edge cases that induced a failure.
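
A rough sketch of that idea follows, assuming the payload is JSON and using Python's standard `json` module. The article specifies neither, and the function names `walk` and `leaves` are hypothetical. The walker makes no assumption about the type of the payload root, so an object, an array, or a bare scalar at the top level all pass through the same code path.

```python
import json
from typing import Any, Iterator, List, Tuple


def walk(node: Any, path: str = "$") -> Iterator[Tuple[str, Any]]:
    """Yield (path, value) for every scalar leaf, whatever the root type is."""
    if isinstance(node, dict):
        for key, value in node.items():
            yield from walk(value, f"{path}.{key}")
    elif isinstance(node, list):
        for index, value in enumerate(node):
            yield from walk(value, f"{path}[{index}]")
    else:
        # Scalars (str, int, float, bool, None) end the recursion.
        yield path, node


def leaves(raw: str) -> List[Tuple[str, Any]]:
    """Parse a JSON payload and list its scalar leaves with their paths."""
    return list(walk(json.loads(raw)))


if __name__ == "__main__":
    # The common case (object root) and two less common roots.
    for payload in ('{"a": {"b": [1, 2]}}', '[{"x": null}]', '42'):
        print(payload, "->", leaves(payload))
```

Feeding it an array root and a scalar root alongside the usual object root is exactly the sort of uncommon input that testing surfaced in my case.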

Why do I fixate on basics like this? I can think of two reasons.

First, I’ve seen the results for myself. Putting each of the above into consistent practice has improved the quality of my work, allowing me to tackle more complex problems. The work I’m doing now would be impossible without solidity on these basics.

Second, companies continue to squeeze out more productivity from their workforce by adopting the cutting-edge technology of the day, generative AI being merely the latest iteration of this trend. Don’t mistake the means for the ends: Companies don’t intrinsically want generative AI or any particular tool; they want increased productivity.

It’s too soon to say if generative AI will yield the quantum leap that so many seem to think it will. Before embracing a new paradigm, why not fully leverage an existing one? It’s way more efficient to improve on basics than to add a new and specialized factor that may not remain relevant to the equation.

Regardless of your discipline, more of what you do on a regular basis draws on basic skills than highly advanced ones. This is the Pareto Principle (more commonly known as the “80/20 Rule”) in action: 20% of your skills toolkit accomplishes 80% of your work.

My current teacher likes to say that “mastery is just really refined basics.” Don’t overlook the road to mastery because of how unassuming it appears.

Fresh from the July 28 DebConf24 conference in South Korea comes a new open-source community project, eLxr. It is a Debian derivative with intelligent edge capabilities designed to simplify and enhance edge-to-cloud deployment strategies.

Edge computing distributions provide a framework that brings enterprise applications closer to data sources such as IoT devices or local edge servers. The eLxr project launched its first Debian derivative release, inheriting Debian’s intelligent edge capabilities. The community plans to expand these for a streamlined edge-to-cloud deployment approach.

As an open-source, enterprise-grade Linux distribution, eLxr addresses the unique challenges of near-edge networks and workloads. According to eLxr.org, this approach enhances eLxr’s distribution and strengthens Debian by expanding its feature set and improving its overall quality.

The eLxr project first launched in June as a community-driven effort dedicated to broadening access to cutting-edge technologies for both enthusiasts and enterprise users seeking a next-generation solution that scales from edge to cloud based on Debian Linux. The community’s mission is centered on accessibility, innovation, and maintaining the integrity of open-source software from a freely available Linux distribution.

According to Mark Asselstine, principal technologist at Wind River and eLxr, its latest version, Aria, is based on Debian 12 Bookworm. It follows Debian’s release path and adds its own versioning, with the first release being eLxr 12.6.1.0 — Aria.

Custom embedded Linux OS builder Wind River announced its involvement Tuesday by taking the community version of eLxr to a new level. It introduced eLxr Pro, a commercial enterprise Linux offering to address the unique needs of cloud-to-edge deployments. The recently launched open-source eLxr project distribution benefits from long-term commercial support and services provided by eLxr Pro.

Asselstine told LinuxInsider that eLxr has been and always will be a community-based project. It follows an upstream-first methodology and plans to contribute consistently to the Debian and Linux Foundation projects.

Wind River is a founding member of the eLxr community. The company contributed to the initial eLxr release. Other supporters are AWS, Capgemini, Intel, SAIC, and Supermicro.

Wind River developed the new commercial Linux enterprise OS version to address the unique needs of edge-to-cloud deployments, focusing on supporting AI and critical workloads.

The project enables organizations challenged with high-performance edge and enterprise needs to meet stringent performance and operational requirements for a wide range of markets and emerging use cases. These include autonomous vehicles, aerospace and defense, energy, finance, healthcare, industrial automation, smart cities, and telecom.

eLxr supports x86_64-based hardware (optimized for UEFI 64 platforms) and plans to add other architectures, such as Arm64 and Arm SystemReady.

Furthermore, eLxr Pro addresses the challenges of optimizing and deploying near- and far-edge applications to process data closer to where it is generated. It is designed for workloads that involve remote automatic updates, containerized applications, orchestration, inference-AI, machine learning, and autonomous operations.

Over the past decade, “build from source” solutions such as the Yocto Project and Buildroot gained favor for enabling various use cases at the intelligent edge. Those experiences led to eLxr.

Traditional methods of building embedded Linux devices offer extensive customizations and the ability to generate a software development kit (SDK), providing a cross-development toolchain. This allowed developers to maximize the performance of resource-constrained devices while offloading build tasks to more powerful machines.

The increasing connectivity demands of edge deployments included over-the-air (OTA) updates and new paradigms such as data aggregation, edge processing, predictive maintenance, and various machine learning features. Such complexities impose significant burdens — the need to monitor for CVEs and bugs, the use of additional SBOMs and diverse update cadences, and many other challenges.

Those issues created a need for a different architectural approach for both near-edge devices and servers. Meeting that need has typically required multiple distributions, which creates a heterogeneous landscape of operating environments and increases complexity and cost.

This led to building eLxr as a Debian derivative with modern tools to ease maintenance while combining traditional installers with a new set of distro-to-order tools that allow a single distribution to better service edge and server deployment.

Coupled with a unified tech stack, this initiative offers a strategic advantage for enterprises aiming to optimize their edge deployments, create a seamless operating environment across devices, and set the foundation for future innovations in edge-to-cloud deployments. Existing enterprise solutions move more slowly than users require to innovate and adopt new technologies quickly.

The eLxr project chose Debian for two primary reasons. First, Debian’s staunch adherence to the open-source philosophy for over 30 years was a defining factor. Second, its embrace of derivative efforts fits the developmental needs.

Debian encourages the creation of new distributions and derivatives that help expand its reach into various use cases. Debian sees sharing experiences with derivatives as a way to broaden the community, improve the code for existing users, and make Debian suitable for a more diverse audience.

Developers built eLxr around Debian to attract a broad range of users and contributors who value innovation and community-driven development. The goal is to foster collaboration, transparency, and the spread of new technologies.

This approach taps into Debian’s development lifecycle by introducing innovative new content that is not yet available in Debian. It also highlights eLxr’s agility and responsiveness to emerging needs.

A related mutual benefit is that contributions made by eLxr members remain accessible and beneficial to the broader Debian community over the long term.

According to Asselstine, Wind River and the eLxr project have a work-in-progress relationship. All content on eLxr.org and eLxr.dev is generated by public projects hosted on GitLab or upstream projects such as Debian.

Wind River is facilitating the initial stages. For example, it has provided for GitLab domain registration.

“Once a governance body, including treasury, is set up for the project, this relationship will adjust accordingly, allowing the project full control of project assets,” he explained.

Wind River Product Management initiated development work on the commercial version based on input from multiple sources, including users, customers, partners, other third parties, and internal entities. All work will be evaluated and prioritized for inclusion in the commercial version, ensuring timely delivery and maximum benefit to the company’s commercial customers.

The community and Pro editions of eLxr Linux target several intended user scenarios. A key category is current users of existing Linux distributions such as CentOS or Ubuntu who complete installations from ISOs and rely on runtime system configuration tools such as cloud-init, Puppet, or Ansible, or even manual configuration.

“The driving factor for these users is deciding to make this switch to avoid recent decisions such as CentOS’s move to a stream release, Ubuntu’s push to expand the use of snaps or Red Hat’s new source policies,” Asselstine said.

Enterprise supplier disruptions, such as the CentOS end of life in June 2024, have forced CIOs to reevaluate their Linux vendors. According to Wind River President Avijit Sinha, current options are often too limiting or overly complex, leading to implementations that do not meet the dynamic demands of this rapidly evolving segment.

This trend is driving the demand for seamless cloud-to-edge solutions that efficiently manage complex workloads, such as rapid data processing, AI, and machine learning, by reusing core operating system components and common codebases and frameworks.

“With eLxr Pro, customers deploying next-generation near-edge and enterprise server solutions are empowered to innovate using a free, open-source distribution optimized for performance, security, and ease of use. They can do so with confidence, knowing it is backed by commercial enterprise support, professional services, and integrated software tools,” said Sinha.

In the ever-crowded CRM market, vendors must differentiate themselves to stand out from competitors. Pipeliner CRM founder and CEO Nikolaus Kimla tested that principle when he was forced to rebuild his CRM platform around open source to create a modern and agile new system.

Through that four-year process, Kimla demonstrated that agile CRM is more sustainable and can seamlessly integrate new features and updates. The result is a system that helps users customize its features to fit their organization’s needs. Open-source technology creates a flexible, user-friendly platform for sales professionals, addressing all daily functions within the CRM environment.

“The most successful CRM structures demonstrate an ability to assess situations quickly and react intelligently. They are capable of staying agile considering rapidly evolving technology. They capitalize on opportunities like artificial intelligence, mitigate risks, and maintain a competitive edge in rapidly changing business environments,” Kimla told CRM Buyer.

Pipeliner built its original CRM on Adobe AIR and Flash technology, a combination that became the framework for one of the biggest gaming platforms. It was well suited for a highly graphical user interface, he noted.

Adobe transitioned its technology to another architecture as Flash technology died, presenting Kimla with the choice of going out of business, remaining static with existing out-of-date software, or innovating with new technology.

Rebuilding the platform from scratch with open-source components posed unavoidable challenges. These included maintaining customer service and retaining the same interface while reprogramming the backend over those four years.

The new platform offers advantages such as faster development, AI integration for better customer experience, and a more affordable pricing model. It also features a visual design framework, reduces onboarding time by 70%, and aims to make administration accessible to non-certified users.

Retaining existing customers was a priority, along with ensuring a smooth transition to the new platform.

“We had a lot of customers. And if you want not to lose them, one of the biggest problems is when you have a totally new interface because then the customer has to redo everything,” Kimla explained.

To solve that, he decided to recreate the same interface and bring the same navigation and appearance to the new application. That meant bringing the entire backend along as well.

“It was already very large. It took us four years of reprogramming, and you were basically out of business or still in business but with a reduced workload.”

Pipeliner CRM managed to remain in business through the rebuild process by running its old and new technology in parallel. Kimla described this parallel operation as one of the most exciting outcomes.

“We were able to do that and still grow the company, even if not big, while reprogramming everything. At one point, the old system was no longer actively developed but was still sold and used by customers. This was quite an endeavor,” he offered.

The new platform provided the latest technology. According to Kimla, it gave Pipeliner CRM a significant edge over Salesforce, Microsoft, and other large competitors whose systems were cumbersome and, in many ways, built on legacy technology such as scripting languages.