Hewlett Packard Enterprise on Tuesday announced it was open-sourcing The Machine to spur development of the infant computer design project.

HPE has invited the open source community to collaborate on its largest and most notable research project yet. The Machine focuses on reinventing the architecture underlying all computers built in the past 60 years.

The new design shifts computing to a memory-driven architecture. Bringing open source developers in early in the software development cycle will familiarize them with that fundamental shift and could help them build components of The Machine from the ground up.

However, it may be too early to tell how significant HPE’s move is. Open sourcing a new technology that is nothing more than a research project may have a chilling effect on community-building among developers.

The Machine is “not a commercial platform or solution. As such, I’m not sure how many commercial independent software developers outside of close HPE partners will want to spend time on it,” said Charles King, principal analyst at Pund-IT.

Potential for Success

HPE’s early decision to open source the initiative could have an impact similar to what IBM realized years ago with its Power processor, King told LinuxInsider.

“IBM’s decision to open source its Power processor architecture has attracted a sizable number of developers, silicon manufacturers and system builders. It would not surprise me if HPE found some inspiration in OpenPOWER’s success,” he said.

Regardless, the open source community will not be able to do much with The Machine until it is widely available at a reasonable price, noted Rod Cope, CTO of Rogue Wave Software.

The Machine has awesome potential, but making it available to the OSS community will have little immediate effect, he said.

“The broader community will wait and see how powerful it is. Over time, however, there is no doubt that it will enable entirely different kinds of databases, proxies, security scanners and the like,” Cope told LinuxInsider.

Machine Primer

The Machine is designed to expand what computers can do. Powered by hundreds of petabytes of fast memory, it remembers the user’s history and integrates that knowledge to inform real-time situational decisions. Users can apply the results to predict, prevent and respond to future events, according to Bdale Garbee, HPE Fellow in the Office of HPE’s CTO.

HPE wants to change the 60-year-old computer model, which is limited in its ability to digest exponentially increasing amounts of new data. Within the next four years, 30 billion connected devices will generate unprecedented amounts of data.

Legacy computer systems cannot keep up. Hewlett Packard Labs wants to revolutionize the computer from the ground up, Garbee noted.

The Machine’s design strategy aims to give computers a quantum leap in performance and efficiency, turning big data into secure, actionable intelligence while using less energy and lowering costs. It does so by putting data, rather than processors, at the center of the architecture.

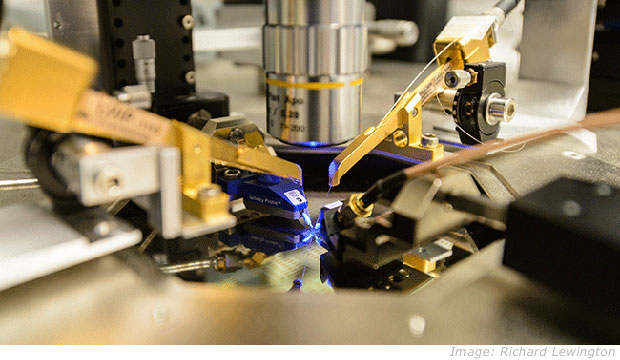

Its memory-driven computing approach collapses memory and storage into one vast pool of universal memory. The Machine connects that memory to processing power through an advanced photonic fabric. Using light instead of electricity is key to rapidly accessing any part of the massive memory pool while using much less energy.
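On today's commodity hardware, memory-mapping a file gives a rough feel for what it means to erase the line between memory and storage: a program updates data in place with ordinary loads and stores, and never serializes it to a separate storage format. The Python sketch below is only an analogy under that assumption. It does not model The Machine's universal memory or photonic fabric, and `open_pool`, `increment_counter` and `POOL_SIZE` are hypothetical names.

```python
import mmap
import os
import struct
import tempfile

POOL_SIZE = 4096  # hypothetical pool size in bytes


def open_pool(path: str, size: int = POOL_SIZE) -> mmap.mmap:
    """Map a file into memory so it can be read and written like RAM."""
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)
    try:
        if os.fstat(fd).st_size < size:
            os.ftruncate(fd, size)  # new bytes read back as zeros
        return mmap.mmap(fd, size)
    finally:
        os.close(fd)  # the mapping keeps its own handle, so this is safe


def increment_counter(pool: mmap.mmap, offset: int = 0) -> int:
    """Update an 8-byte counter in place, with no separate save/load step."""
    (value,) = struct.unpack_from("<Q", pool, offset)
    struct.pack_into("<Q", pool, offset, value + 1)
    pool.flush()  # push the change to the backing file (stand-in for persistence)
    return value + 1


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "pool.bin")
    pool = open_pool(path)
    increment_counter(pool)
    increment_counter(pool)
    pool.close()
    pool = open_pool(path)  # "restart": the counter survived
    print(increment_counter(pool))  # prints 3
    pool.close()
```

The counter lives in the mapped bytes themselves, so reopening the pool picks up where it left off without any load step.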

The goal is to use The Machine to broaden the reach of technical innovation. That will provide new ways to extract knowledge and insights from large, complex collections of digital data with unprecedented scale and speed. Ultimately, the hope is that it will lead to solutions for some of the world’s most pressing technical, economic and social challenges.

Phase 1

HPE has released tools to help developers contribute to four code segments:

- Fast optimistic engine for data unification services — This new database engine will speed up applications by taking advantage of a large number of CPU cores and non-volatile memory.

- Fault-tolerant programming model for non-volatile memory — This process adapts existing multithreaded code to store and use data directly in persistent memory. It provides simple, efficient fault-tolerance in the event of power failures or program crashes.

- Fabric Attached Memory Emulation — This will create an environment designed to allow users to explore the new architectural paradigm of The Machine.

- Performance emulation for non-volatile memory bandwidth — This will be a DRAM-based performance emulation platform that leverages features available in commodity hardware to emulate different latency and bandwidth characteristics of future byte-addressable NVM technologies.
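The fault-tolerant programming model is described above only at a high level. A common general technique for making in-place updates to persistent memory survive crashes is undo logging: save the old bytes, mark the log valid, update in place, then invalidate the log; after a crash, a valid log means the update is rolled back. The Python sketch below illustrates that technique under stated assumptions and is not HPE's released code. A `bytearray` stands in for non-volatile memory, a lock serializes updates from multiple threads, and `UndoLogPool`, `SimulatedCrash` and `LOG_CAPACITY` are hypothetical names.

```python
import struct
import threading
from typing import Optional

# Log header: valid flag, absolute start of the target range, length of saved bytes.
LOG_HEADER = struct.Struct("<BQQ")
LOG_CAPACITY = 256  # hypothetical limit on bytes changed per update


class SimulatedCrash(Exception):
    """Raised to mimic a power failure partway through an update."""


class UndoLogPool:
    """Failure-atomic in-place updates over a byte buffer using an undo log.

    The bytearray stands in for non-volatile memory. Real persistent-memory
    code would also flush each step to the persistence domain before the next.
    """

    def __init__(self, size: int, buf: Optional[bytearray] = None):
        self.size = size
        self.log_data = LOG_HEADER.size  # where the saved old bytes go
        self.data_start = LOG_HEADER.size + LOG_CAPACITY
        self.buf = buf if buf is not None else bytearray(self.data_start + size)
        self.lock = threading.Lock()  # one update at a time across threads

    def write(self, offset: int, payload: bytes, crash_midway: bool = False) -> None:
        n = len(payload)
        if n > LOG_CAPACITY or offset < 0 or offset + n > self.size:
            raise ValueError("update out of range")
        start = self.data_start + offset
        with self.lock:
            # 1. Save the old bytes first, then mark the log valid.
            self.buf[self.log_data:self.log_data + n] = self.buf[start:start + n]
            LOG_HEADER.pack_into(self.buf, 0, 1, start, n)
            # 2. Update the data in place; a crash here leaves it half-written.
            half = n // 2
            self.buf[start:start + half] = payload[:half]
            if crash_midway:
                raise SimulatedCrash()
            self.buf[start + half:start + n] = payload[half:]
            # 3. Commit by invalidating the log.
            LOG_HEADER.pack_into(self.buf, 0, 0, 0, 0)

    def recover(self) -> bool:
        """Roll back an interrupted update; return True if one was found."""
        valid, start, n = LOG_HEADER.unpack_from(self.buf, 0)
        if not valid:
            return False
        self.buf[start:start + n] = self.buf[self.log_data:self.log_data + n]
        LOG_HEADER.pack_into(self.buf, 0, 0, 0, 0)
        return True

    def read(self, offset: int, length: int) -> bytes:
        start = self.data_start + offset
        return bytes(self.buf[start:start + length])
```

For example, a `write` that raises `SimulatedCrash` leaves the target range half-updated; building a new `UndoLogPool` over the same buffer and calling `recover()` restores the previous bytes, so readers never see a torn update.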

Benefits Package

Any new computing architecture faces hurdles in attracting industry supporters and interested customers. If HPE’s effort succeeds, it could substantially lower some of the barriers The Machine is likely to encounter on its way to market, King said.

Problems like virus scanning, static code analysis, detecting the use of open source software, and grand challenges like simulating the brain and understanding the human genome will be changed forever by massive amounts of persistent memory and high bandwidth in-machine communication, said Rogue Wave’s Cope.

“Large project communities around Hadoop and related technologies will swarm on the potential game-changing capabilities,” he predicted. “This will be a big win for HPE and competitors working on similar solutions.”
