The aim of OpenAI, which launched last week, is to use artificial intelligence to benefit society. The fledgling project has drawn $1 billion in funding from some of high tech’s most recognizable names to help it achieve that goal.

The nonprofit organization seeks to “advance digital intelligence in the way that is most likely to benefit humanity as a whole, unconstrained by a need to generate financial return,” CTO Greg Brockman and Research Director Ilya Sutskever wrote in a blog post.

Investors include SpaceX CEO Elon Musk, LinkedIn cofounder Reid Hoffman, PayPal cofounder Peter Thiel, Y Combinator founding partner Jessica Livingston and Y Combinator President Sam Altman, Amazon Web Services, and Infosys.

Altman and Musk will co-chair OpenAI.

The investors believe AI “should be an extension of individual human wills and, in the spirit of liberty, as broadly and evenly distributed as possible,” Brockman and Sutskever wrote.

What OpenAI Will Do

OpenAI’s researchers will be encouraged to publish their work, and any patents awarded will be shared with everyone. They will collaborate freely with both institutions and corporate entities.

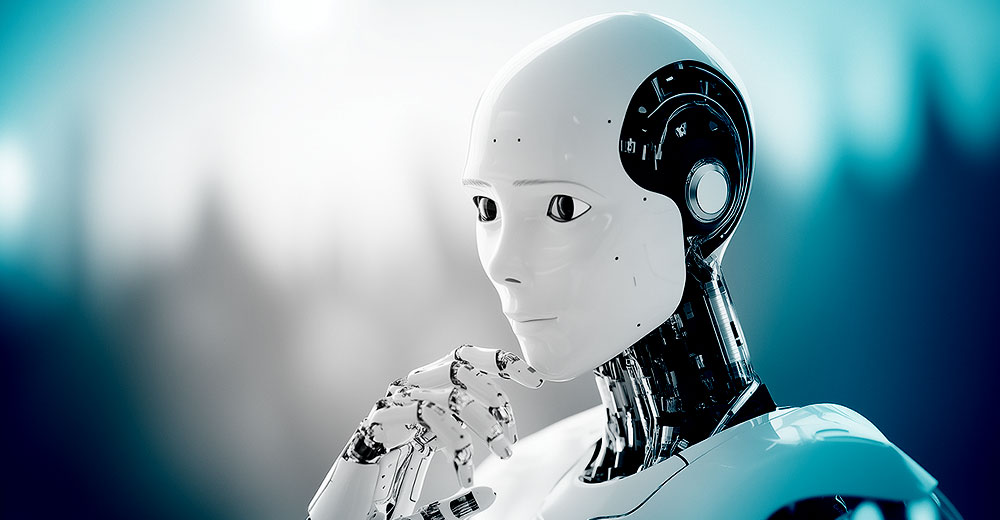

Today’s AI systems are outstanding when it comes to pattern recognition problems, but they’re limited to those. OpenAI plans to work to remove those constraints until computers eventually can reach human performance on virtually every intellectual task, Brockman and Sutskever wrote.

Doing so correctly could be of huge benefit — or it could cause incalculable harm. OpenAI’s investors consider it important to have a leading research institution that “can prioritize a good outcome for all over its own self-interest,” they said.

Who Decides?

OpenAI’s aims raise the question of who decides what’s going to benefit humanity.

“How many entities do we need to tell us what’s good for us?” asked Jim McGregor, principal analyst at Tirias Research.

No one’s qualified to do so, he told LinuxInsider.

“Many of the advances in science and technology have been a result of coincidence,” McGregor pointed out. “So, how do you plan for innovation that meets certain moral requirements? This is a concern over Hollywood scenarios on what could happen.”

On the other hand, “even when focused on not doing evil, often excitement about the technology can blind the researchers to what’s prudent,” pointed out Rob Enderle, principal analyst at the Enderle Group.

Can Bias Be Avoided?

Pressure from corporations, technologists and, in the case of encryption, governments influences the high-tech standards creation process, so what are the chances of OpenAI avoiding such pressures?

“This is open research, which pretty much means there’s going to be limits as to who’ll be able to contain what results,” Enderle told LinuxInsider.

“Believing you can create an open platform and then control the outcome is like Google believing they could create Android and not have Amazon steal it from them for the Kindle,” he said.

The Ethical Dilemma

Machine learning has to be open, and AI can’t be straitjacketed if it is to achieve its full potential, asserted Mukul Krishna, a global research director at Frost & Sullivan.

Once AI has evolved to the point where it can effectively stand on its own two legs and begins making decisions based on its calculations and extrapolations, “that will raise the ethical and philosophical question of what do you do,” he told LinuxInsider.

“Either you limit the algorithms to the data the AI has and what it will spit out so it’s just an analytic engine for pure data, and weed out anything in the data or algorithm that will allow it to look at the intangibles of data and abstract the concepts, or you may be moving towards creating a new intelligence, a conscious intelligence,” Krishna suggested.

“Then you have to figure out, are you ready to accept the question of untethered AI?” Krishna asked.

The specter of an untethered AI has troubled Musk for some years now, and he has spoken out in public about its danger, as have several prominent experts.

However, “the only impact [OpenAI] will have is [to] influence public opinion and, possibly, see regulation in certain countries,” McGregor said. “It won’t change the course of history or the path of innovation.”